Given the recent posts on social media about remote working, I feel the need to share with you a few thoughts and considerations that have been going around in my head. This is a particularly delicate moment in history: the tight grip of the pandemic is loosening and we can see light on the horizon. And that is not all: the imminent (who really knows) and full reopening of businesses will force us to choose between a "return to the past" and a genuine "renewal".

Not even a pandemic can make us change for the better

We read statements about how employees are neither productive nor focused when working from home and, at the same time, posts in which some people consider companies backward because they fail to understand the added value of remote work. We also see harsh criticism of organizations that saw the pandemic merely as a constraint forcing them into a situation they were not used to. They are accused of needing total control over the people working on site at the expense of those people's health, and there are remarks about the waste that could be avoided, and so on. Naturally, this comes from both sides.

The polemical, accusatory tone adopted by both sides leads nowhere, and the first thing that comes to mind is that not even a pandemic can make us change for the better. This probably holds in every context, but especially now one has to ask: "why aren't we running retrospectives on what happened?" It is as if we had held our breath until we resurfaced and could breathe again. Yet the pseudo cultural conversion we all went through, willingly or not, is nothing like suffocation. Quite the opposite! It is true that many people suffered, many did not make it through this period, and a great many will probably risk losing their jobs. Unfortunately, we cannot do anything about that directly. So, for those who had the luck and the strength to come through these two years unscathed, why not seize the opportunity to improve, to try to change, to understand the circumstances of everyone involved, and to criticize, yes, but constructively?

Once again we come back to the concept of change; there is no avoiding it. And once again there are conflicting points of view. They are not necessarily complementary, but they are certainly at odds on the crux of the matter: working outside the office.

An employer should reason in terms of objectives, but are we sure that is always right, and that this pattern can be applied in every case? An employee should guarantee their presence during fixed hours, regardless of what they are doing, but are we sure there is no better solution? Team members should work together in the same time slots, but what if they are in different time zones? I have read in some posts that "a great company can call itself great if it lets employees decide where they work best", but are we sure that every employee wants this and, more generally, that the statement holds in every case? I don't know, and I certainly don't have all the answers. In my view, though, such blunt and confident statements, as seen here and there, don't amount to much. They turn into unconstructive, quasi-motivational criticism meant to make some noise on the various platforms. Everyone is, and must be, free to express their opinion, but at the same time must be ready to receive comments and, hopefully, to engage in a discussion.

That said, employers and employees alike have been through all sorts of things. Anyone who, like me, has spent years on both sides can understand how essential it is to tear down the wall that builds up between the two. Much as with DevOps, the mantras on which every working life should be based, every day, are these: breaking down boundaries, communicating and sharing, making things flow, choosing together for the good and the well-being of everything and everyone, and continuously learning from experience.

In short, it is true that stress is killing those who (sometimes) have to travel needlessly to a workplace that is often far away. It also matters that our planet suffers from the fine particulate emissions of private transport. And finally, it is obvious that the time and energy wasted on commuting make people far less productive. But let's look at the other side of the coin: are we sure that working only from home (or from wherever we feel best), partly to be with our family as much as possible, is really the solution? Are we sure that the quality of that "seeing our family" is so high? We still have to work and, at least in my case, my son did not get to enjoy his dad all that much. At the same time, I saw him grow up in a blur, because I was not mentally present. Doesn't that generate stress? Are we sure that the increased electricity consumption, winter heating and summer cooling in our homes is so harmless in terms of emissions? Have we all checked that our energy supplier does not rely, for example, on fossil fuels? And in winter we might turn on pellet stoves, whose combustion is anything but eco-friendly! Those stoves could have stayed off. Finally, performance is better when we avoid wasting our energy sitting in traffic, and we may be able to rest better, true. But the side effect of working many more hours (because unless you are disciplined, I fear you end up working much longer) is precisely a drastic reduction in our cognitive abilities and focus. This is extremely harmful for anyone whose work relies on reasoning, thinking and creativity.


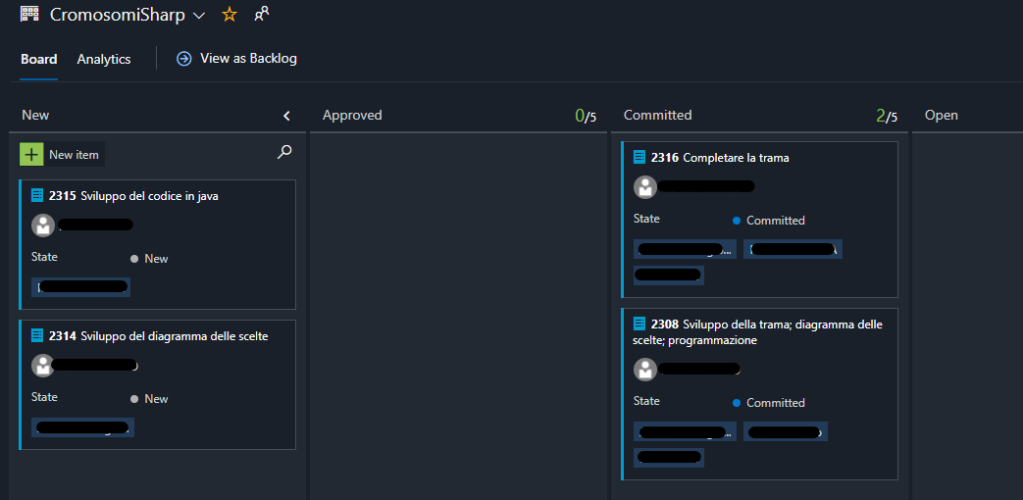

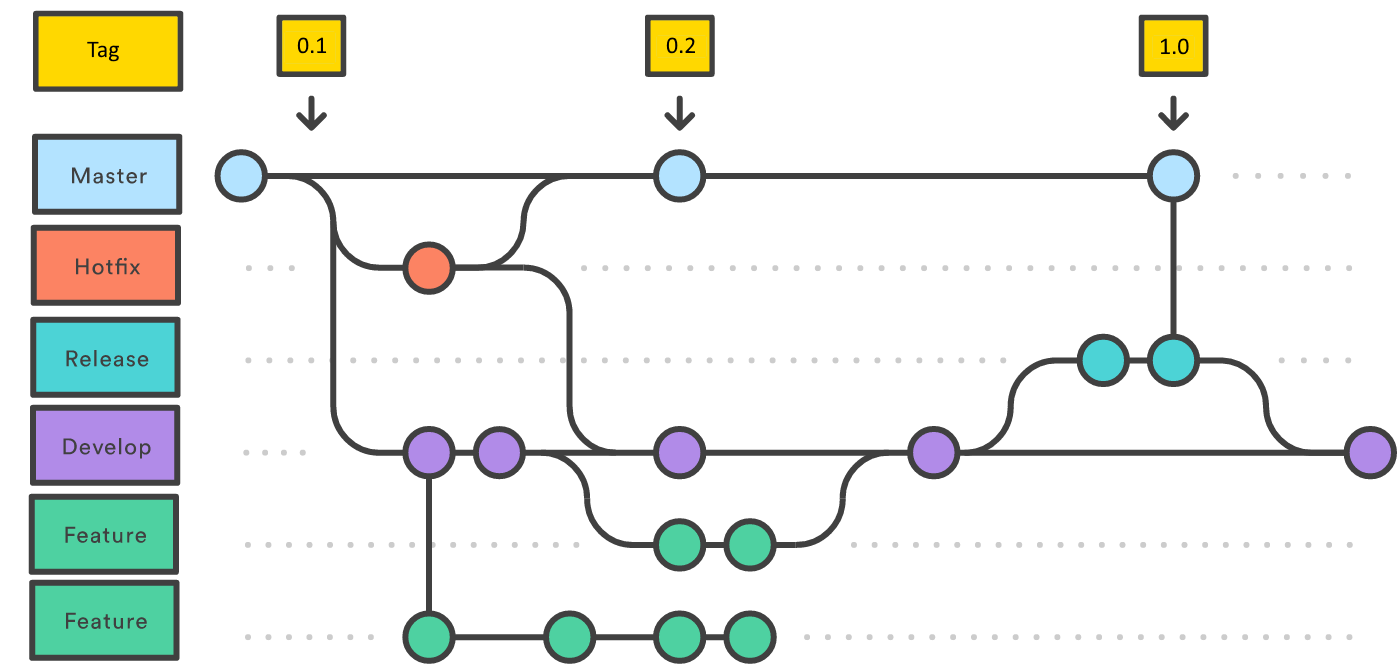

I am not saying there is a definitive, objective solution. I don't have all the answers, but recently I have formed some ideas based on the people in our organization and the clients I have met. As often happens, taking the hybrid route turns out to be the most effective choice, at a more than acceptable cost. In my view, hybridization and flexibility are fundamental characteristics of any working relationship. They are also the basis for talking about "smart" working, a term that is overused and often misused. All of this, however, should be supported by a conscious way of living the company on the part of all its members, from top to bottom. A set of collaborative tools helps even further in communicating the way of life in and with the company. The idea is simply based on the inspect and adapt pattern: observe carefully, understand and, based on that inspection, adapt to the situation. This yields a high degree of personalization in people's work. It strikes a balance between office presence and remote work, and between team participation and different time zones. All of this supports both business and personal needs and is backed by collaborative tools: chat (Slack, Teams, etc.), video conferencing (Zoom, Teams, Meet, etc.), task and development management (Azure DevOps, Trello, Jira, etc.), and so on. Ultimately, it is important to listen to people in order to put them in a position to work at their best, for the benefit of both the company and the people themselves. What better than a hybrid solution to support this?

In conclusion, we have the tools. We can tackle everything described here through a gradual cultural change, rather than trying to do everything at once. We should reduce waste and increase people's well-being, and therefore the company's as well. With the right insights and observations of what happens in our organizations, we could finally seize the opportunity for a more natural and less polemical transformation than the one we are living through. It will have costs, but I believe the return on investment will be invaluable.